Have you heard of the “replication crisis”? It’s mainly a thing in the social sciences, and it’s just what it sounds like: when researchers try to replicate results from previous studies, they can’t. Vast swaths of recent social science research appear to be completely bogus.

But what about medical science? That’s a whole different thing: it’s a hard science that has always demanded high standards because people’s lives can be at stake. This doesn’t mean that every study always pans out, but it does mean that it’s not susceptible to the kind of widespread bullshit crisis currently roiling the social sciences.

But what if it is? Here’s the nickel version of a story from the world of medical testing: Back in the 90s, a bunch of studies were done that showed promising results in the search for genes that are associated with depression. This year, a gigantic new study came out that basically overturned all of it.

Put like that, it doesn’t sound so bad. That’s how science works, after all. But if you sit still for the longer version, it starts to sound a lot worse.

First, here’s the background. Back in the 90s, before the rise of cheap, wide-scale genetic testing, the search for genetic associations was fairly simple. You’d put together a group of people (a few hundred or so) and test them all for a specific variant of a target gene. Then you’d compare that to the presence of depression, usually as a score on some kind of diagnostic test. If you found an association that was statistically significant, you’d conclude that variation in the target gene affected depression and that the gene would make a good subject for further research.

Quite a few of these targets were discovered. In particular, one of them, called 5-HTTLPR, became something of a star. The initial studies didn’t find too much, but then researchers started doing follow-up studies that checked to see if 5-HTTLPR became associated with depression if it was triggered by some kind of environmental cue. It turned out that it did. In fact, specific alleles of 5-HTTLPR appeared to be responsible for producing depression not in everyone, but specifically in people under stress. It also appeared to be associated with insomnia, anxiety, SAD, Alzheimer’s, and a variety of other things.

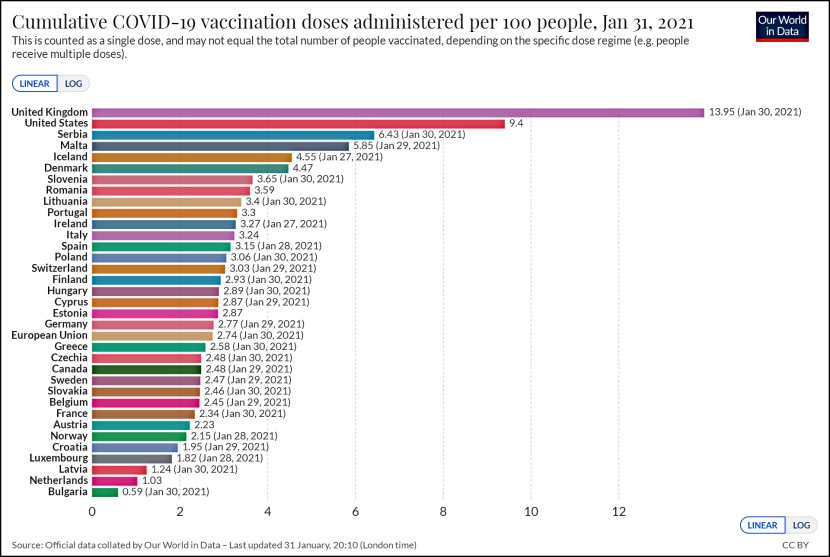

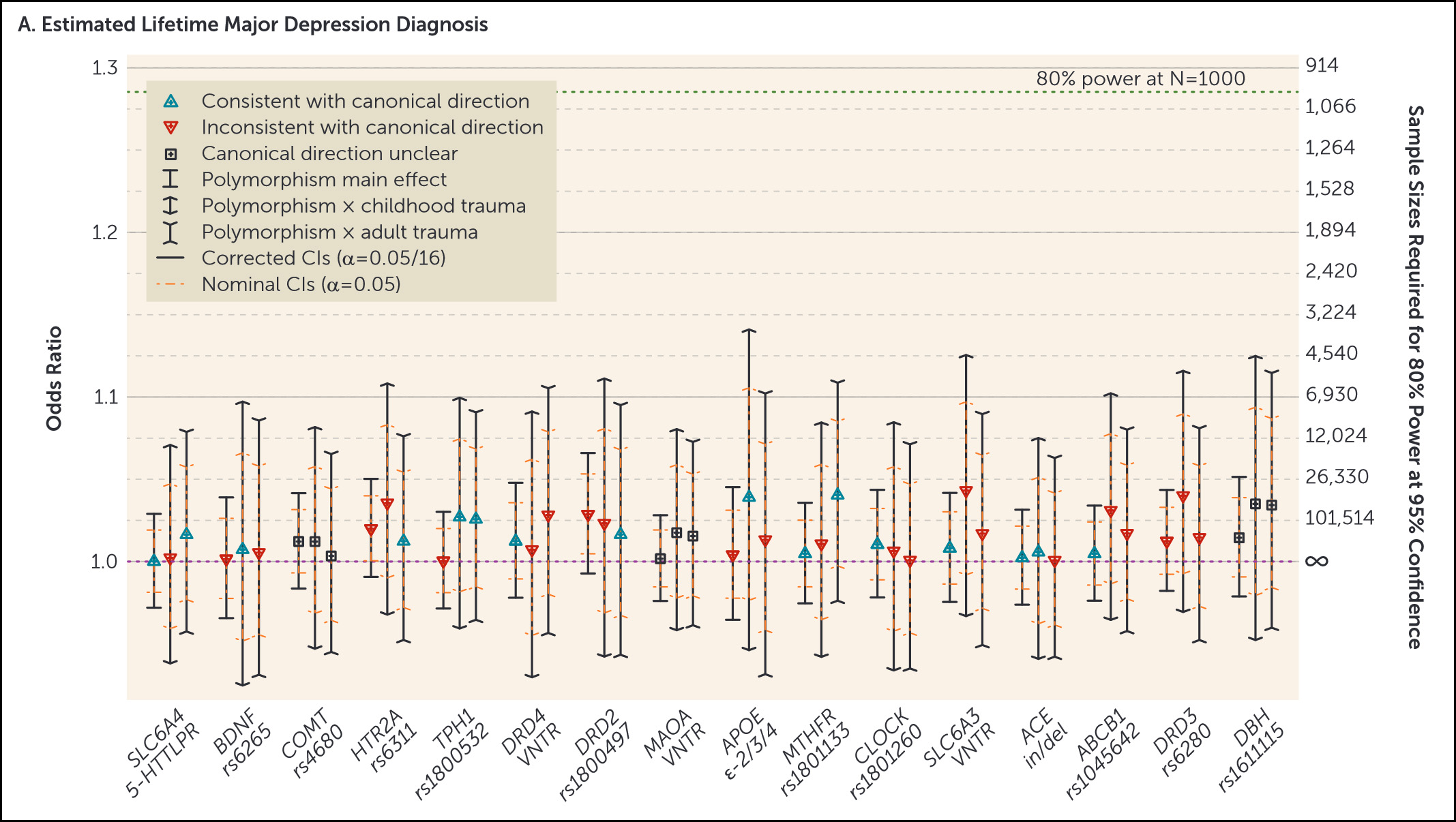

But there was a problem: recent research has shown that it’s wildly unlikely for a single gene variant to have a detectable impact on a complex disorder like depression. So a new study was done not just on 5-HTTLPR, but on all the other genes that were associated with depression, using modern tools that could work on samples of hundreds of thousands. Here are the results:

Don’t worry about the details of this chart. What’s important is (a) all of the red and blue triangles are close to 1.0, and (b) all of the confidence intervals cross 1.0. This means that (a) the effects of these gene variants are tiny, and (b) they’re so tiny we can’t say they really exist at all. There’s certainly no way that researchers with sample sizes of only a few hundred people had even a remote chance of discovering these effects, assuming they ever existed in the first place.
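To put rough numbers on that last point, here’s a back-of-the-envelope power calculation. It’s only a sketch, not the method the new study used: the 15 percent baseline depression rate, the 1.05 odds ratio, the group sizes, and the function names are all hypothetical values picked for illustration. It uses a standard normal approximation for comparing two proportions to estimate how often a study would reach p<.05 if an effect that small were real.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def carrier_rate(base_rate, odds_ratio):
    """Depression rate among carriers implied by a base rate and an odds ratio."""
    odds = base_rate / (1 - base_rate) * odds_ratio
    return odds / (1 + odds)


def detection_power(base_rate, odds_ratio, n_per_group, alpha=0.05):
    """Approximate chance that a two-sided two-proportion z-test reaches p < alpha.

    Assumes equal numbers of carriers and non-carriers (a simplification).
    """
    p0 = base_rate
    p1 = carrier_rate(base_rate, odds_ratio)
    # Standard error of the difference between the two observed rates.
    std_err = ((p0 * (1 - p0) + p1 * (1 - p1)) / n_per_group) ** 0.5
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = abs(p1 - p0) / std_err
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


# Hypothetical inputs, chosen only for illustration.
BASE_RATE = 0.15
ODDS_RATIO = 1.05

for n in (150, 5_000, 200_000):
    power = detection_power(BASE_RATE, ODDS_RATIO, n)
    print(f"{n:>7} per group: power = {power:.3f}")
```

Under these assumed inputs, a study with 150 people per group has only slightly better than a 5 percent chance of producing a significant result, which is about what you’d get if there were no effect at all. It takes groups in the hundreds of thousands before detection becomes reliable.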

This all comes via Scott Alexander, a psychiatrist who writes at Slate Star Codex. He explains why this is even worse than you think:

> What bothers me isn’t just that people said 5-HTTLPR mattered and it didn’t. It’s that we built whole imaginary edifices, whole castles in the air on top of this idea of 5-HTTLPR mattering. We “figured out” how 5-HTTLPR exerted its effects, what parts of the brain it was active in, what sorts of things it interacted with, how its effects were enhanced or suppressed by the effects of other imaginary depression genes. This isn’t just an explorer coming back from the Orient and claiming there are unicorns there. It’s the explorer describing the life cycle of unicorns, what unicorns eat, all the different subspecies of unicorn, which cuts of unicorn meat are tastiest, and a blow-by-blow account of a wrestling match between unicorns and Bigfoot.
>
> This is why I start worrying when people talk about how maybe the replication crisis is overblown because sometimes experiments will go differently in different contexts. The problem isn’t just that sometimes an effect exists in a cold room but not in a hot room. The problem is more like “you can get an entire field with hundreds of studies analyzing the behavior of something that doesn’t exist”. There is no amount of context-sensitivity that can help this.

A whole lot of scientists appear to have gotten on the bandwagon and figured out all the details of a thing that didn’t actually exist in the first place. How did that happen? It’s a good question, and it provides some evidence that it’s not just the social sciences that are susceptible to lousy studies.¹

There are plenty of other problems with medical and pharmaceutical testing, not least of which is the habit of hiding all the studies that turn out to be negative for your particular drug. But the 5-HTTLPR debacle suggests that things go much deeper than this. The replication crisis has already told us that there’s a lot of shoddy work going on, and apparently that shoddy work goes well beyond the social sciences.

¹This fiasco also provides some additional support for those who think the whole p<.05 standard for statistical significance is way too lax. In theory, meeting that standard means there’s only a 5 percent possibility that your results were just due to chance, but in reality the share of “significant” findings that turn out to be wrong seems to be more like 20 or 30 or 40 percent.²

²Yes, yes, I know that p levels are more complicated than that. But this is the basic textbook definition, and it’s good enough for a blog post. Besides, the experts still can’t decide among themselves how p should be defined, and until they do there’s not much point in my taking a side.
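For the curious, the gap in footnote 1 between 5 percent and 20 to 40 percent can be illustrated with a little arithmetic. The sketch below is an assumption-laden illustration, not anything taken from the studies discussed here: the share of tested hypotheses that are actually true (`prior_true`) and the statistical power are hypothetical inputs, and `false_positive_share` is a made-up helper name. It just counts how many significant results would be false alarms under each scenario.

```python
def false_positive_share(prior_true, power, alpha=0.05):
    """Fraction of statistically significant results that are false positives."""
    true_hits = prior_true * power          # real effects that reach significance
    false_hits = (1 - prior_true) * alpha   # null effects that reach it by chance
    return false_hits / (true_hits + false_hits)


# Hypothetical scenarios: (share of tested hypotheses that are real, power).
scenarios = [(0.5, 0.8), (0.1, 0.8), (0.1, 0.2)]
for prior_true, power in scenarios:
    share = false_positive_share(prior_true, power)
    print(f"prior={prior_true:.1f}, power={power:.1f}: {share:.0%} of hits are false")
```

If only one in ten of the hypotheses being tested is real and studies have 80 percent power, 36 percent of the significant results are false, even though every one of them passed p<.05. Lower power pushes the share higher still.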